- Joined

- Dec 21, 2020

- Messages

- 7

- Reaction score

- 1

Hi, i have been working on a tello interface for the past half a year for my finale year project and tommorow we have presentation for the parents and other students and I really want to do a live performence but I still have 1 major problem relating to the frames while using a Yolov3 object detection model.

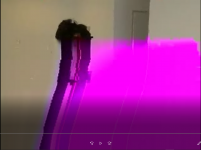

frame gets really corrupted.

This only happens when I am using a new model that I started using lately. The frames get really corrupted with frozen pixels and purple pixels in the bottom. The thing is that when I run only the useage of this model on my laptop's camera is works extremly smoothly. The usage of the model itself is made in a thread to prevent the long time it takes to execute from slowing the main code. I really can't stop using this model so I really need help finding a solution.

Also to note, even if i display a copy of the frame that the drone first gives (without rectangles on it and without touching it just copy from when i get the frame and then display) the frame is still corrupted.

again anything could help thank you so much for your time and have a nice day all !

I am attaching a very beautiful picture of me just so you can get the idea of what I mean by corrupted, thank again <3

In case it matters this is my ffmpeg command -

CMD_FFMPEG = (f'ffmpeg -hwaccel auto -hwaccel_device opencl -i pipe:0 '

f'-pix_fmt bgr24 -s {FRAME_X}x{FRAME_Y} -f rawvideo pipe:1 ')

EDIT:

I already did the presentation.. but if anyone knowns how to fix that i would still love to know!!!

frame gets really corrupted.

This only happens when I am using a new model that I started using lately. The frames get really corrupted with frozen pixels and purple pixels in the bottom. The thing is that when I run only the useage of this model on my laptop's camera is works extremly smoothly. The usage of the model itself is made in a thread to prevent the long time it takes to execute from slowing the main code. I really can't stop using this model so I really need help finding a solution.

Also to note, even if i display a copy of the frame that the drone first gives (without rectangles on it and without touching it just copy from when i get the frame and then display) the frame is still corrupted.

again anything could help thank you so much for your time and have a nice day all !

I am attaching a very beautiful picture of me just so you can get the idea of what I mean by corrupted, thank again <3

In case it matters this is my ffmpeg command -

CMD_FFMPEG = (f'ffmpeg -hwaccel auto -hwaccel_device opencl -i pipe:0 '

f'-pix_fmt bgr24 -s {FRAME_X}x{FRAME_Y} -f rawvideo pipe:1 ')

EDIT:

I already did the presentation.. but if anyone knowns how to fix that i would still love to know!!!

Attachments

Last edited: