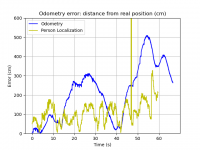

I made a demo video of Tello localization using odometry.

The position is calculated from the vgx and vgy velocities that the Tello reports in its state data in SDK mode.
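For anyone who wants to try the same idea, here is a minimal sketch of integrating vgx and vgy into a position. It is not the code used in the video. The function names, the receive timestamps, and the `scale` factor are my own assumptions: the SDK document does not clearly state the velocity unit, so 0.1 (dm/s to m/s) is a guess you should check against your own data, and the velocities are integrated as reported, with no yaw rotation.

```python
from typing import Dict, Iterable, List, Tuple


def parse_state(line: str) -> Dict[str, float]:
    """Parse a Tello state string such as 'pitch:0;roll:0;yaw:0;vgx:1;vgy:-2;'."""
    fields: Dict[str, float] = {}
    for item in line.strip().split(";"):
        if ":" not in item:
            continue  # skip the empty piece after the last ';'
        key, value = item.split(":", 1)
        try:
            fields[key] = float(value)
        except ValueError:
            pass  # skip non-scalar fields (e.g. comma-separated values)
    return fields


def dead_reckon(
    samples: Iterable[Tuple[float, str]], scale: float = 0.1
) -> List[Tuple[float, float]]:
    """Integrate vgx, vgy over time with forward Euler steps.

    samples: (receive time in seconds, raw state string) pairs.
    scale:   converts raw velocity units to m/s (0.1 assumes dm/s, unverified).
    Returns one (x, y) estimate per sample, starting at the origin.
    """
    x = y = 0.0
    prev_t = None
    track: List[Tuple[float, float]] = []
    for t, line in samples:
        state = parse_state(line)
        if prev_t is not None:
            dt = t - prev_t
            x += state["vgx"] * scale * dt
            y += state["vgy"] * scale * dt
        prev_t = t
        track.append((x, y))
    return track
```

Feed it the state lines you logged together with the time each one arrived, for example `dead_reckon([(0.0, "vgx:10;vgy:0;"), (1.0, "vgx:10;vgy:-5;")])`. Each step simply adds velocity times elapsed time, so any error in the velocity goes straight into the position.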

The analysis shows how quickly the error can accumulate, so odometry alone is unreliable.
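To illustrate why the error grows, here is a small sketch with made-up numbers (not data from the video). It assumes a drone that is actually hovering while the reported velocity carries a constant bias. Both the bias value and the sample period are hypothetical.

```python
def position_error(bias_mps: float, duration_s: float, dt: float = 0.1) -> float:
    """Position error after integrating a biased velocity for duration_s seconds.

    The true velocity is zero (hover), so everything that accumulates in the
    estimate is error caused by the constant bias.
    """
    steps = int(round(duration_s / dt))
    true_pos = 0.0
    est_pos = 0.0
    for _ in range(steps):
        true_v = 0.0
        est_pos += (true_v + bias_mps) * dt  # what dead reckoning computes
        true_pos += true_v * dt              # where the drone really is
    return est_pos - true_pos


for seconds in (10, 30, 60):
    print(seconds, "s ->", round(position_error(0.05, seconds), 2), "m")
```

With a bias of only 0.05 m/s the estimate is off by roughly 0.5 m after 10 s and roughly 3 m after 60 s. The error is the bias multiplied by the elapsed time, and nothing in the integration ever pulls it back, which is why an external position reference is needed.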

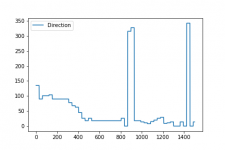

Algorithm: dead reckoning from a motion model developed with Tello Vision Telemetry Lab

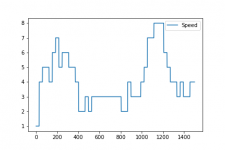

Dataset: telemetry generated with the Tello Vision 1D app

Ground truth: Mavic Mini video by #manuelvenuti

GitHub - pgminin/tello-vision-telemetry-lab: A toolkit for the Tello Vision 1D app telemetry visualization, analysis, and data fusion algorithm development

Tello Vision 1D - Apps on Google Play

Computer Vision and 1D Follow Me for DJI Ryze Tello drone (SDK mode)
